RESEARCH

01

Cognitive Robotics

Murata et al., IEEE Trans. Auton. Ment. Dev., 2013.

Murata et al., Adv. Rob., 2014.

Murata et al., IEEE Trans. Neural Networks Learn. Syst., 2017.

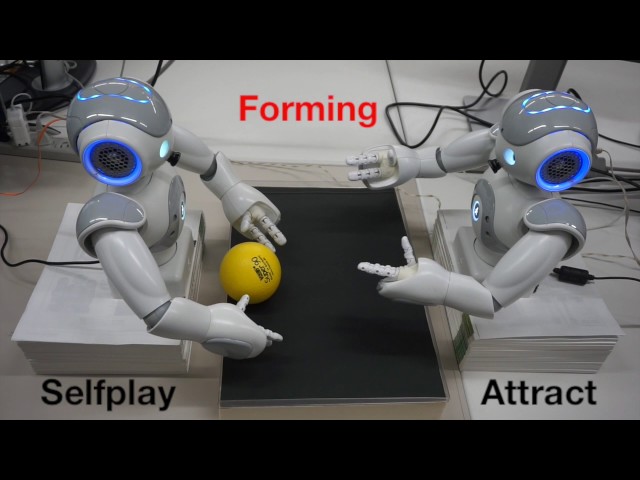

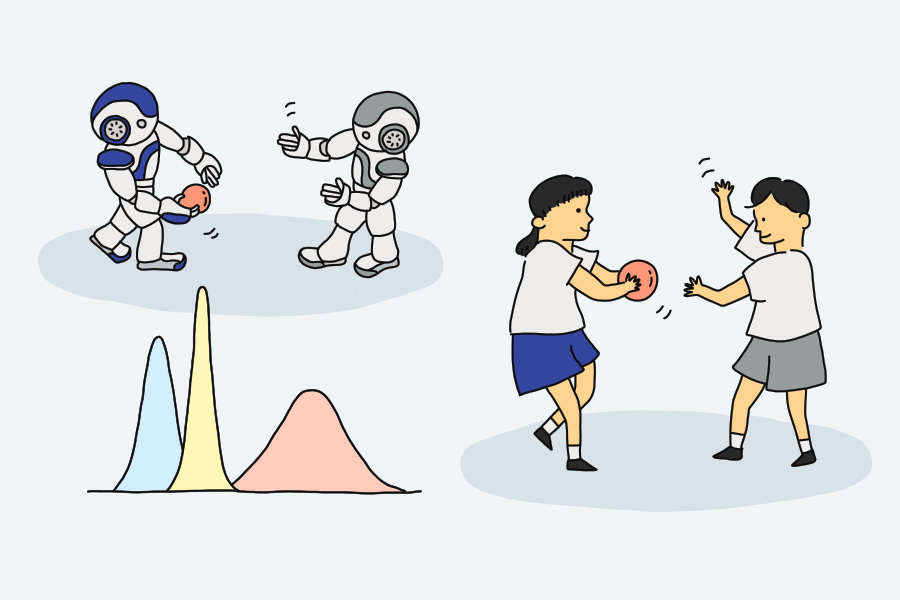

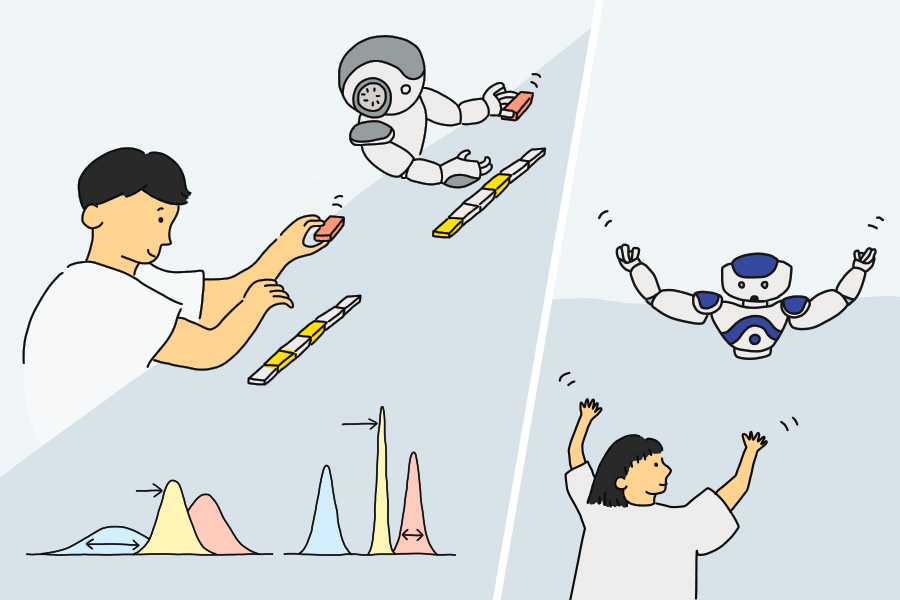

Our research on cognitive robotics aims at a synthetic understanding of the computational mechanisms of human cognitive functions such as perception, action, and attention, which self-organize through learning from continuous sensory-motor experiences. To that end, we develop a theory based on experimental findings and hypotheses from cognitive neuroscience, developmental psychology, and related research fields. The theory is embodied as a computational model, the model is implemented in a robot, and the theory is tested through synthetic robotic experiments on learning cognitive tasks. As a computational principle for the brain, we focus on predictive information processing, known as the free-energy principle or predictive coding. To build computational models, we use neural networks (deep learning techniques). We have proposed the stochastic continuous-time recurrent neural network (S-CTRNN), which learns to predict both the mean and the uncertainty of observations, and the stochastic multiple timescale RNN (S-MTRNN), which introduces a multiple timescale property into the neural dynamics. Cognitive functions that we have studied include reaching, imitation, self-other discrimination, language, and communication.
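The two ideas behind these networks, predicting an uncertainty alongside a mean and mixing fast and slow neural dynamics, can be illustrated with a minimal sketch. It is not the published S-CTRNN or S-MTRNN implementation: the function names `leaky_update` and `gaussian_nll`, the Euler-style leaky-integrator update, and the Gaussian likelihood are assumptions chosen for illustration.

```python
import math


def leaky_update(u_prev, drive, tau):
    """One discrete step of a leaky-integrator unit (illustrative form).

    tau is the unit's time constant: a larger tau keeps more of the
    previous internal state, so the unit changes more slowly.
    """
    return (1.0 - 1.0 / tau) * u_prev + (1.0 / tau) * drive


def gaussian_nll(target, mean, variance):
    """Negative log-likelihood of `target` under N(mean, variance).

    Minimizing this over both outputs lets a model report high variance
    where its mean prediction is unreliable, instead of being penalized
    as if it were certain.
    """
    return 0.5 * (math.log(2.0 * math.pi * variance)
                  + (target - mean) ** 2 / variance)


# Fast and slow units driven by the same constant input of 1.0.
fast = slow = 0.0
for _ in range(5):
    fast = leaky_update(fast, 1.0, tau=2.0)   # small time constant
    slow = leaky_update(slow, 1.0, tau=50.0)  # large time constant

# Same prediction error, two different uncertainty estimates.
confident = gaussian_nll(target=3.0, mean=0.0, variance=1.0)
uncertain = gaussian_nll(target=3.0, mean=0.0, variance=9.0)
```

After five steps the fast unit has almost reached its input while the slow unit has barely moved, which is the sense in which units with different time constants can capture structure at different timescales. For the same large prediction error, the larger predicted variance gives the lower loss, so estimating uncertainty is rewarded when observations are noisy.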

As a future direction, in addition to extending our synthetic cognitive robotics studies, we plan to strengthen collaboration with researchers in cognitive neuroscience and consciousness science. Planned studies include analyzing whole-cortical electrocorticograms (ECoG) from non-human primates with deep learning techniques and working on integrated information theory (IIT) for consciousness. By combining the synthetic and analytical approaches, we aim to unravel the mystery of human cognitive functions and intelligence.

02

Robot Learning

Murata et al., IEEE Trans. Cognit. Dev. Syst., 2018.

Murata et al., ICONIP, 2019.

Murata et al., ICONIP, 2019.

WidowX 250 Robot Arm 6DOF.

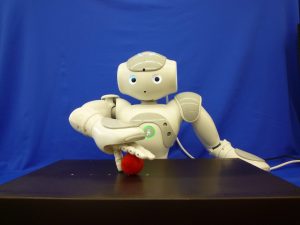

Building on findings from our cognitive robotics studies, our research on robot learning aims at realizing intelligent robots that can interact or collaborate with other cognitive agents (humans or robots). If robots are used for a specific purpose, such as a production line in a factory, it is sufficient to anticipate all possible situations in advance and preprogram the necessary set of behavioral patterns. However, to realize intelligent robots that can work with and support people in everyday environments, it is necessary to solve technical issues in complex, flexible, context-dependent behavior generation and in adaptive decision making under uncertainty. Since it is impossible to anticipate every situation in such environments, the key is to consider mechanisms that enable robots to acquire the necessary functions by learning from and generalizing their own sensory-motor experiences. We focus on predictive information processing as a principle behind such functions and have been developing robot intelligence technology on that basis. Previous research topics include human–robot collaborative assembly, dynamic human goal inference, and dynamic replanning of robot action plans.

In this field, it is important to establish efficient methods for data collection and learning. Therefore, we plan to introduce the mechanisms of curiosity and intrinsic motivation into robots and also to work on self-supervised learning using play data.

03

Computational Psychiatry

Idei, Murata et al., Comput. Psychiatry, 2018.

Murata et al., IEEE ICDL-EpiRob, 2019.

Murata et al., IEEE SMC, 2019.

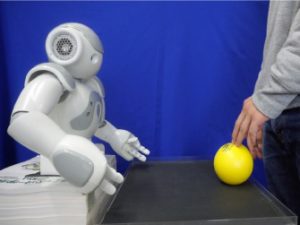

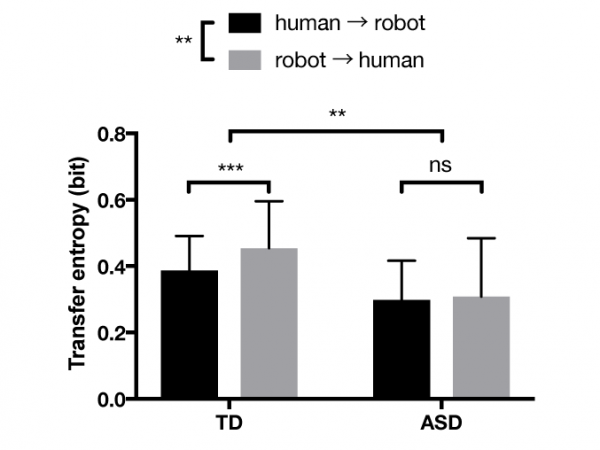

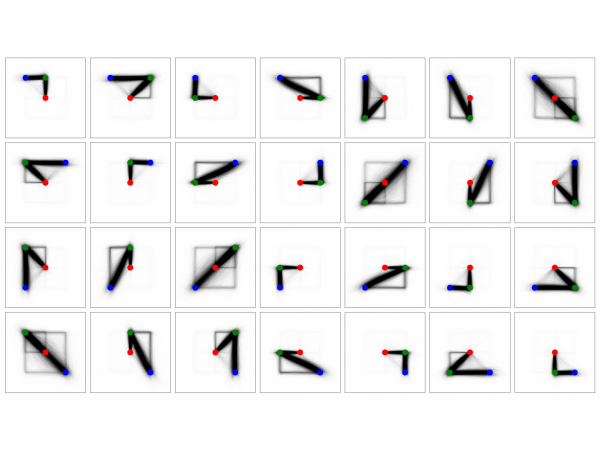

Building on findings from our cognitive robotics studies, our research on computational psychiatry aims at a synthetic understanding of the computational mechanisms of psychiatric disorders such as autism spectrum disorder (ASD) and schizophrenia. Although computational psychiatry is a relatively new field within psychiatry, it has been attracting worldwide attention in recent years. We are particularly interested in understanding psychiatric disorders in terms of deficits in predictive coding. In addition to the synthetic approach that combines computational models and robots, we have conducted experiments with human participants. Specifically, by conducting experiments on imitative interactions with a robot controlled by an RNN, we succeeded in extracting differences in cognitive and behavioral characteristics between typically developed participants and participants with ASD. Beyond laboratory experiments with robots, we have also conducted a large-scale online experiment via crowdsourcing. To investigate the relationship between drawing behavior and cognitive and personality traits, this experiment collected drawing data and answers to seven self-report psychiatric symptom questionnaires from more than 1000 participants. We are planning another online experiment on drawing interaction between participants and a drawing agent controlled by a deep generative model trained on the collected drawing data.

As with the future direction of our cognitive robotics studies, we aim to understand the computational mechanisms of psychiatric disorders by combining the synthetic and analytical approaches and by strengthening collaboration with researchers in the fields of psychiatry, cognitive neuroscience, and psychology.